
7 AI Tools for Systematic Reviews (Finish in 5 Hours)

AI tools for systematic literature reviews can help you finish a review in about 5 hours. Otio streamlines screening, summarizing, and drafting to cut tedious work.

Feb 17, 2026

Systematic reviews traditionally require many hours of sifting through research articles, screening abstracts, and extracting data by hand. Advanced AI tools can compress this labor-intensive process into hours without compromising academic rigor. Choosing the right AI tools for report writing can improve both accuracy and efficiency, letting researchers focus on critical analysis.

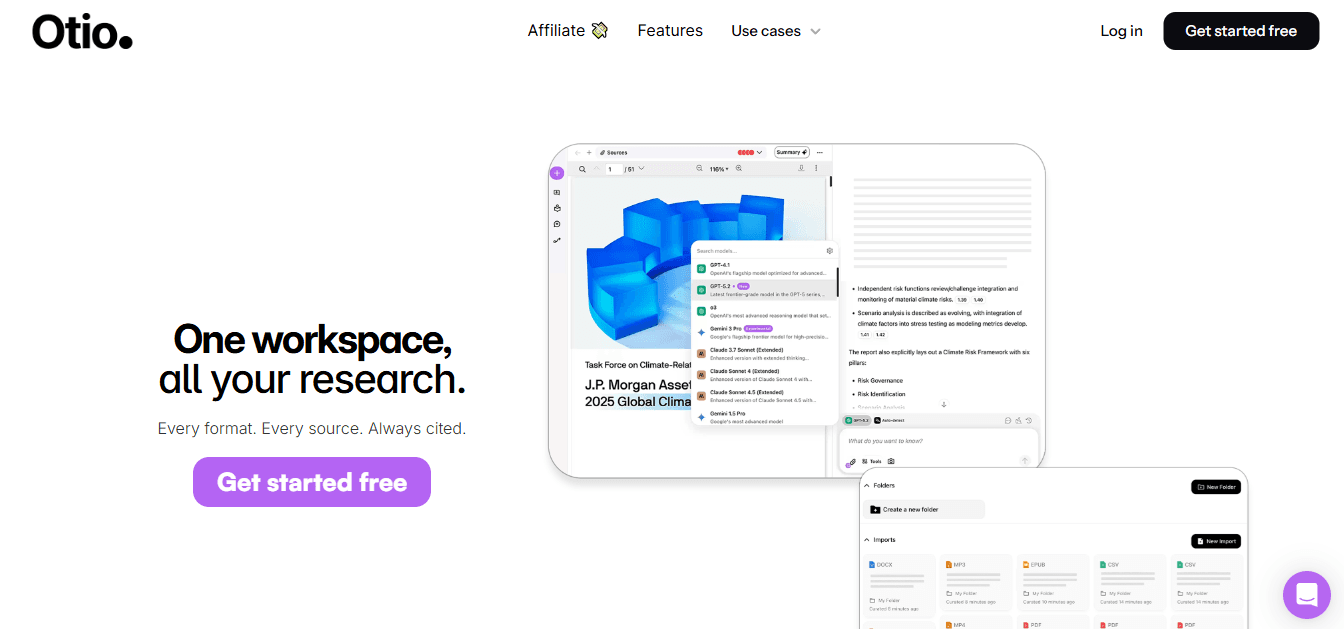

A consolidated workflow that integrates literature screening, data extraction, and synthesis improves clarity and accelerates progress. Streamlined processes enable researchers to avoid repetitive tasks while maintaining essential quality standards. An AI research and writing partner, such as Otio, integrates all stages of a systematic review into a single, efficient platform.

Summary

Systematic reviews typically take 18 months to complete, according to BMC Medical Research Methodology. This is not because researchers are slow but because the process is inherently fragmented. Screening 200 abstracts manually consumes roughly three to seven hours before deep reading even begins, and researchers spend up to 40% of their time on manual literature review tasks such as context switching between scattered tools. The timeline problem is one of workflow design, not intellectual capacity.

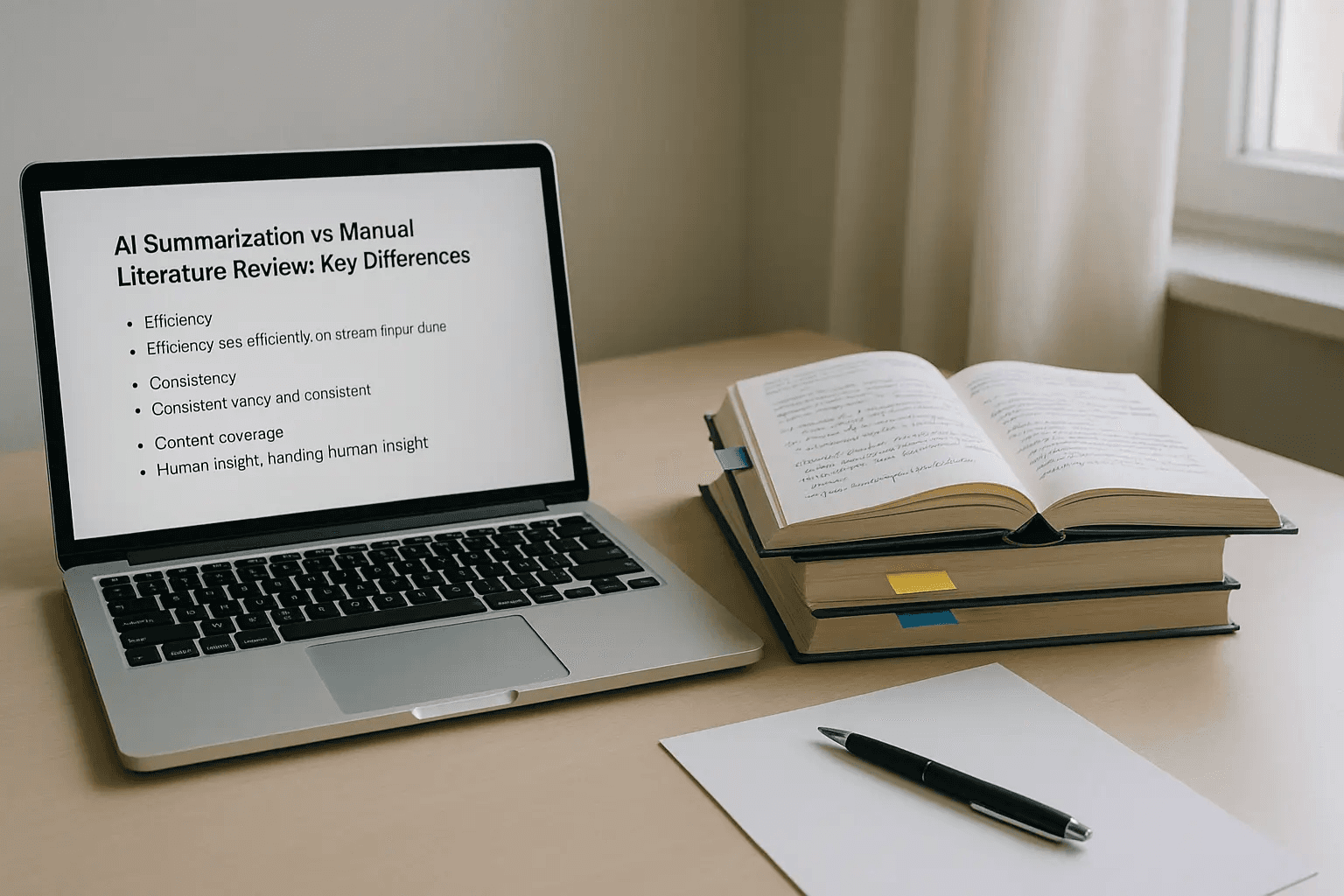

AI-assisted screening systems can reduce review time by 30-50% while maintaining comparable inclusion accuracy in pilot studies. Manual abstract screening scales poorly because each paper requires one to two minutes of careful assessment, which multiplies into hours of repetitive filtering. Rigor remains intact: AI handles prioritization, researchers approve the final decisions, and what used to take half a day shrinks to about 90 minutes.

Workers who use AI can boost productivity by up to 40 percent, according to workplace adoption studies, and this pattern holds in research contexts when tools remove administrative burden rather than analytical depth. The time saved comes from eliminating summary rewriting (60-90 minutes), screening duplication (90 minutes), discovery friction (45 minutes), and late-stage reorganization (30 minutes). These aren't intellectual shortcuts but coordination efficiencies that preserve academic standards while collapsing timelines from weeks to hours.

Fragmentation increases cognitive load because switching among PDF readers, note-taking apps, and citation managers requires the brain to continually reconstruct context. Researchers lose 20 to 30 percent more time during synthesis simply recovering mental context after each tool switch, which adds two extra hours to an 80-article review without producing any new analysis. The fatigue isn't from thinking harder but from coordinating scattered information across disconnected platforms.

Thematic clustering before full reading prevents late-stage restructuring, which can add days to review timelines. Most researchers organize sources by author or year, then discover conceptual patterns only after reading everything twice and manually regrouping insights. Structuring by theme upfront surfaces contradictions, consensus points, and research gaps early, turning the final draft into an assembly process rather than a discovery expedition that requires constant backtracking.

Otio addresses this by consolidating PDFs, videos, and web links into a single workspace, where AI generates summaries, extracts findings, and enables cross-source querying without toggling between tools.


Why Systematic Reviews Usually Take Weeks

Systematic literature reviews can take weeks or even months. This is not because researchers are slow; it is because the process is messy, manual, and cognitively demanding. How long a review takes depends largely on how the workflow is set up. Most researchers spend less time thinking and more time organizing, screening, cross-checking, and rewriting. A typical systematic review may involve 100 to 500 abstracts, numerous inclusion and exclusion criteria, manual tagging, and duplicate removal. Each abstract takes roughly one to two minutes to review, so 200 papers mean about three to seven hours of first-pass screening, before any deep reading starts.
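The screening estimate is simple multiplication, and it is worth re-running with your own numbers before planning a review. The sketch below is a minimal Python illustration; the function name `screening_hours` is hypothetical, and the per-abstract times are assumptions you supply.

```python
def screening_hours(n_abstracts: int, minutes_per_abstract: float) -> float:
    """Return total first-pass screening time in hours."""
    return n_abstracts * minutes_per_abstract / 60


# 200 abstracts at one, two, and three minutes per abstract.
for minutes in (1, 2, 3):
    print(f"{minutes} min/abstract -> {screening_hours(200, minutes):.1f} hours")
# 1 min/abstract -> 3.3 hours
# 2 min/abstract -> 6.7 hours
# 3 min/abstract -> 10.0 hours
```

At one to two minutes per abstract, 200 abstracts land at roughly 3.3 to 6.7 hours; at three minutes each, the same pile takes about 10 hours.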

Why do researchers accept that reviews take weeks?

Many researchers believe that reviews "naturally take weeks" because the screening stage often feels like a big project. According to BMC Medical Research Methodology, the average systematic review takes 18 months to finish. While some of this time reflects organizational factors, most of it is due to workflow friction.

What does a common workflow look like?

A common workflow typically uses multiple tools: PDFs are saved in folders, notes are written in Word, highlights are made in a PDF reader, citations are managed in Zotero, and eligibility is tracked in spreadsheets. Each time you switch between tools, you add friction to the process. Research in cognitive science indicates that task switching takes more time and causes mental fatigue. Even brief switching periods can add up over several hours.

How does a fragmented organization slow down synthesis?

When notes are in one place and PDFs in another, synthesis slows down because the brain has to keep switching between them. The delay isn't about intelligence; it's about how the material is arranged. One researcher described it as "playing Tetris with my own thoughts, except that the pieces are in different rooms."

How does repetition in the review process contribute to time spent?

Once papers are selected, researchers often return to the PDFs, re-highlight key sections, manually copy findings, and rephrase them into summary notes. This repetition can double the time spent on the review process. For example, a master's student reviewing 40 articles spends two to three hours screening, six to eight hours reading, and four to five hours rewriting summaries. The rewriting stage alone becomes a hidden second pass, and that is where most of the fatigue sets in.

Can modern platforms improve the review process?

Platforms such as Otio integrate this workflow. They let users chat with their documents, create summaries, and extract insights without reopening files or switching between applications. Instead of moving among PDF readers, note-taking apps, and citation managers, users work in a single workspace where the AI's answers are grounded in their own sources. The process starts to feel like a conversation rather than an exercise in copying information.

Why do researchers often delay structuring their reviews?

Many researchers delay structuring their reviews until they have gathered all their insights: they collect everything first and organize it later. This often means discovering gaps late and going back to search again, which can add several days. Care matters because systematic reviews must be thorough; missing even one important study can greatly lower credibility.

How can AI-assisted screening reduce review time?

The belief that thorough equals slow is understandable, as academic standards often require precision. However, modern workflows have changed. Studies on AI-assisted screening in evidence synthesis show that machine-assisted abstract screening can significantly reduce review time while maintaining accuracy in pilot tests. The process can remain rigorous while becoming more organized.

What misconceptions do researchers have about systematic reviews?

Researchers often think that systematic means exhaustive, and that exhaustive means time-consuming. As a result, they associate slow processes with accuracy. However, workflow design has changed a lot. The delays researchers experience are not due to their intelligence or effort; they come from how the work is organized.

What are the key inefficiencies in the review process?

Screening is manual, which causes delays. Sources are scattered, which makes it hard to pull everything together smoothly. Structure is often imposed late, which forces rework, and any change to the workflow ripples through the overall timeline. Tools can speed up this process without sacrificing rigor; the real challenge is whether researchers can identify the bottlenecks.

What do researchers underestimate about the cost of the process?

Understanding the timeline is important, but it does not capture the real cost. Most researchers tend to underestimate what they are truly losing in the process.


The Hidden Cost of Manual Literature Review Workflows

Manual systematic review workflows don't just take longer; they also silently increase cognitive load, delay publication timelines, and reduce analytical clarity. The cost isn't just time; it also includes mental fatigue, lost opportunities, and decision friction. Let's unpack what that really means. Most researchers manage their work this way: PDFs stored in folders, notes written in Word, citations managed separately, and screening tracked in spreadsheets. Each time researchers switch between these tools, their brains must reorient to the context, recall criteria, and rescan previous notes. According to JAMS Blog's analysis of manual workflows in academic publishing, researchers spend up to 40% of their time on manual literature review tasks. That time isn't dedicated to thinking; rather, it's spent on context recovery.

How does fragmentation affect literature reviews?

In systematic reviews, fragmentation can add 20-30% more time to the synthesis phase, because researchers must mentally reconstruct context each time they shift focus. For example, a researcher who spends four hours reading 80 articles may need an additional two hours to organize insights because the notes are scattered. That extra time is not analysis; it is logistics.

Is manual screening truly safer and more rigorous?

Many researchers believe that careful manual screening is safer and more thorough. This makes sense, because systematic reviews require precision: missing even one relevant study could greatly weaken the conclusions. However, manual screening becomes hard to manage as volumes grow. At one to two minutes per abstract, screening 200 abstracts adds up to roughly three to seven hours, not counting duplicates or rechecking. Evidence synthesis studies show that AI-assisted screening systems can significantly reduce screening time while maintaining comparable accuracy, including in pilot studies. Some systems have reported 30-50% time savings in early-stage screening.

Why does manual coordination expand timelines?

When researchers collect everything first and assemble it later, they often have to revisit the same papers multiple times, rewrite notes repeatedly, and identify gaps late in the process. This method causes timeline expansion. Academic project reports frequently note that systematic reviews can take months. This delay primarily stems from iterative manual processing and revisiting earlier steps. The slow part isn't the thinking work itself; it is the manual coordination.

How do platforms like Otio improve workflow?

Platforms like Otio bring everything together by letting users chat with documents in many formats (PDFs, videos, and web links) within a single workspace. Instead of switching between different tools, users converse with an AI grounded in their own sources rather than relying on generic answers from separate chatbots.

What are the implications of slow reviews?

For postgraduate researchers, slow reviews can delay proposal approval, ethics submissions, journal submissions, and funding applications. For industry researchers, these delays negatively affect product decisions, policy implementation, and competitive positioning. A one-week delay can significantly push back an entire research timeline. The hidden cost isn't just the time spent; it's also the time lost that can affect future opportunities.

How does manual work drain cognitive energy?

Manual workflows not only take more time, but they also drain cognitive energy. When researchers spend hours organizing files and copying notes, they have less cognitive room to identify gaps in themes, spot contradictions, and frame arguments clearly. Olio Health's research on manual workflows found that they quietly drain time, money, and morale in healthcare. This same issue also shows up in research settings. Fatigue can significantly reduce the quality of synthesis, and systematic reviews are judged on synthesis rather than file management.

What concerns persist regarding automation?

Concerns persist due to several factors. Academic training emphasizes caution, as researchers fear that automation may miss nuance in complex tasks. Previous tools often failed to integrate effectively. Early automation systems were frequently unreliable. These concerns were indeed valid in earlier workflows.

What advantages do modern AI tools offer?

Modern AI tools for literature reviews support structured summarization, duplicate detection, thematic clustering, and rapid source retrieval. They keep the workflow rigorous while making it more organized. Staying manual means forgoing the 30 to 50 percent screening-time savings these tools can offer, which leads to more hours spent switching between tasks, delayed project milestones, increased mental fatigue, and less clear synthesis. The problem isn't the complexity of research; it's workflow design.

What is the future of AI in literature reviews?

The question now isn't whether AI can help, but which tools actually deliver on that promise without complicating matters.


7 AI Tools That Cut Review Time to 5 Hours

Completing a systematic review in 5 hours is possible when the repetitive parts of the workflow are automated. The aim is not to eliminate critical thinking but to remove repetitive tasks such as coordination, screening, summarizing, and restructuring, which typically take days. Below are 7 AI tools, each matched to the task it automates and the realistic time savings it delivers. The first bottleneck is scattered material: scattered PDFs, rewritten notes, and copy-and-paste summaries complicate the process and force repeated reopening of documents. These are not problems of thinking; they are problems of coordination.

1. Otio: Centralize, Summarize, and Draft in One Workspace

Otio brings your material together: users can upload articles, PDFs, YouTube videos, or web links in one place. It generates structured summaries, extracts key points, and lets users chat across all sources at once. The AI's answers are grounded in your sources rather than being generic answers from a separate chatbot. Instead of reading, highlighting, rewriting, and organizing information by hand, users upload their materials, and Otio summarizes them, organizes themes, and helps draft sections. This saves 60 to 90 minutes on summary rewriting alone. A PhD student reviewing 40 articles previously spent four hours rewriting highlights into structured notes; with AI-generated summaries, that time dropped to 1.5 hours with the same quality and less duplication.

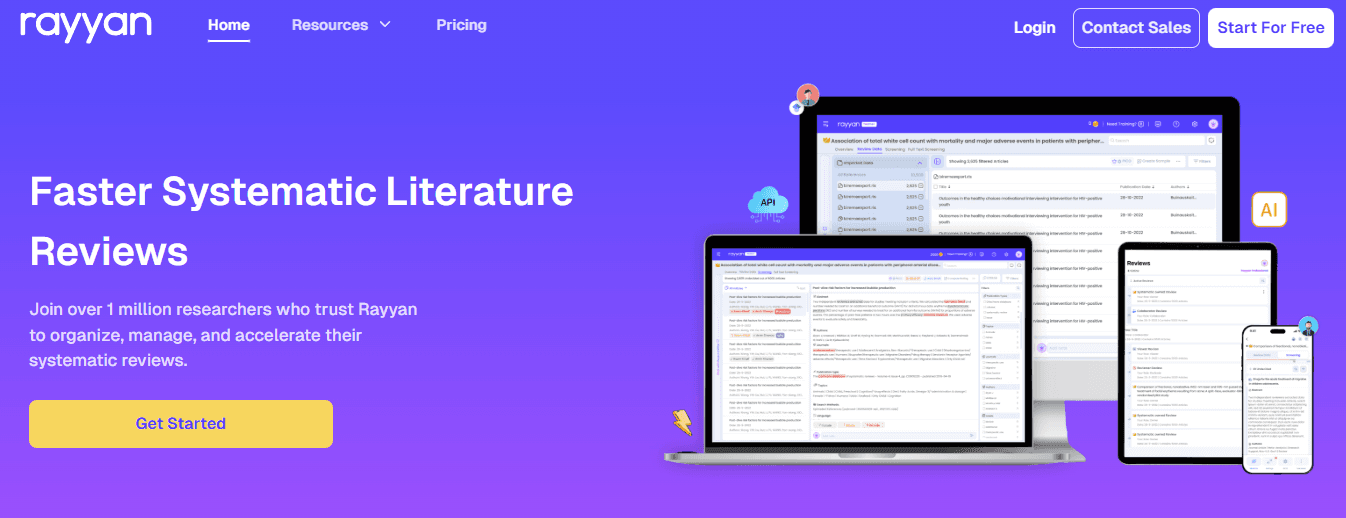

2. Rayyan: AI-Assisted Abstract Screening

Manual tasks such as abstract tagging, duplicate checking, and first-pass elimination are tiring, even for experienced researchers. A careful review takes one to two minutes per abstract, so 200 papers add up to roughly three to seven hours of screening alone. Rayyan uses AI to predict how likely each paper is to be included based on your criteria. Users still approve the decisions, but the system surfaces the most relevant papers first. According to research published in BMC Medical Research Methodology, AI-assisted screening can reduce review time by 30-50% in pilot systematic reviews.
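Rayyan's actual model is not described here, so the following is only a hedged illustration of the general idea of relevance prioritization: score each abstract against inclusion terms, sort, and hand the ranked list to a human. Keyword overlap is a deliberately crude stand-in for a trained classifier, and the names `tokenize`, `relevance`, and `prioritize`, along with the toy abstracts, are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for a trained text model."""
    return set(re.findall(r"[a-z]+", text.lower()))


def relevance(abstract: str, criteria_terms: set[str]) -> float:
    """Fraction of inclusion terms found in the abstract (0.0 to 1.0)."""
    if not criteria_terms:
        return 0.0
    return len(tokenize(abstract) & criteria_terms) / len(criteria_terms)


def prioritize(abstracts: dict[str, str], criteria_terms: set[str]) -> list[tuple[str, float]]:
    """Return (paper_id, score) pairs, most relevant first, for human review."""
    scored = [(pid, relevance(text, criteria_terms)) for pid, text in abstracts.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy data for illustration only.
abstracts = {
    "A": "Randomized trial of exercise therapy for adults with depression",
    "B": "Survey of hospital parking policies",
    "C": "Exercise and mood in older adults: a cohort study",
}
criteria = {"exercise", "depression", "randomized", "adults"}

for pid, score in prioritize(abstracts, criteria):
    print(pid, score)
# A 1.0
# C 0.5
# B 0.0
```

The ranking only changes the order in which a human reads; nothing is excluded automatically, which mirrors the "users still approve the decisions" point above.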

What is the impact of AI-assisted filtering?

If manually screening 200 abstracts takes 3 hours, AI-assisted filtering can reduce that to 1.5-2 hours. Decision-making remains yours, while the tedious filtering goes much faster. Otio's streamlined workflow helps researchers focus on what truly matters by making it easier to filter and analyze their sources. Discovery is the next bottleneck: manual citation tracing and repeated Google Scholar and keyword searches take hours that could be better spent analyzing important content. The problem isn't just finding papers; it's identifying the right ones without opening 30 tabs.

3. ResearchRabbit: Discover Related Studies Faster

ResearchRabbit displays related research networks and recommends relevant papers based on the starting articles. Instead of checking each article's references individually, the system quickly maps connections, which can save researchers 30 to 45 minutes during a literature review. Organizing is the next bottleneck: guesswork in theme grouping and manual conceptual mapping slow down structuring, because researchers may sense that papers are connected but not see the underlying pattern until they've read everything twice.
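The idea of expanding outward from a few starting articles can be sketched as a one-hop walk over a citation graph. This is not ResearchRabbit's algorithm, which the text does not describe; it is a minimal Python illustration that assumes you already have a mapping from each paper ID to the IDs it cites. The function name `related_papers` and the citation data are made up.

```python
from collections import Counter


def related_papers(seeds: set[str], citations: dict[str, set[str]]) -> list[tuple[str, int]]:
    """Rank non-seed papers by how many links they share with the seed set.

    `citations` maps each paper ID to the set of paper IDs it cites.
    A candidate gains one point for each seed it cites and one point
    for each seed that cites it.
    """
    counts: Counter = Counter()
    for paper, refs in citations.items():
        for ref in refs:
            if ref in seeds and paper not in seeds:
                counts[paper] += 1  # this paper cites a seed
            if paper in seeds and ref not in seeds:
                counts[ref] += 1  # a seed cites this reference
    return counts.most_common()


# Made-up citation data: S1 and S2 are the starting articles.
seeds = {"S1", "S2"}
citations = {
    "S1": {"P1", "P2"},
    "S2": {"P2", "P3"},
    "P4": {"S1", "S2"},
    "P5": {"P1"},
}
print(related_papers(seeds, citations))
```

Papers linked to several seeds (here P2 and P4, each with two links) rise to the top, which is the intuition behind "related research networks": the more of your starting set a paper touches, the more likely it belongs in the review.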

4. Connected Papers: Concept Clustering

Connected Papers groups papers by similar ideas, helping users find important studies, related work, and emerging topics. This can save 20 to 30 minutes of organizing time and reduce the need for later fixes. Manual tagging, folder organization, and metadata cleanup add yet another administrative burden: these tasks are not intellectually difficult, but they are tedious and time-consuming.
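One common way to group papers by shared ideas is bibliographic coupling: papers that cite many of the same references are probably about related topics. The sketch below illustrates that technique under stated assumptions and does not describe how Connected Papers builds its graphs. The 0.3 threshold, the function names, and the toy reference lists are all arbitrary.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of references two papers have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster(refs: dict[str, set[str]], threshold: float = 0.3) -> list[set[str]]:
    """Group papers whose reference lists overlap at or above `threshold`.

    Uses union-find, so each cluster is a connected component of the
    "similar enough" graph (single-linkage grouping).
    """
    parent = {p: p for p in refs}

    def find(p: str) -> str:
        while parent[p] != p:
            parent[p] = parent[parent[p]]  # path halving keeps trees shallow
            p = parent[p]
        return p

    for a, b in combinations(refs, 2):
        if jaccard(refs[a], refs[b]) >= threshold:
            parent[find(a)] = find(b)

    groups: dict[str, set[str]] = {}
    for p in refs:
        groups.setdefault(find(p), set()).add(p)
    return list(groups.values())


# Toy reference lists for illustration only.
refs = {
    "P1": {"r1", "r2", "r3"},
    "P2": {"r2", "r3", "r4"},
    "P3": {"r7", "r8"},
    "P4": {"r8", "r9"},
}
print(cluster(refs))
```

Because this is single-linkage grouping, a chain of moderately similar papers can merge into one large cluster; raising the threshold is the simplest way to counter that.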

5. Zotero with AI Tagging Plugins: Automated Organization

Zotero, combined with AI tagging plugins, automatically extracts citation metadata and tags key themes. This can save 20 to 40 minutes on a medium-sized review, reducing busywork and making the analysis clearer. Another delay comes from reading whole PDFs for basic context and looking up citation metrics by hand, all before you even know whether a paper is worth a close read.

6. Semantic Scholar: Fast Insight Extraction

Semantic Scholar provides structured paper summaries, citation context, and influence indicators. This can save 15 to 25 minutes per discovery round and speed up evaluation before deep reading. Finally, manually breaking down methods and findings across multiple papers is tedious and adds little value: rewriting abstracts does not produce new insights; it only rearranges information that has already been processed.

7. Scholarcy: Structured Paper Summaries

Scholarcy quickly extracts structured insights from individual papers, saving 20 to 30 minutes when reviewing 10 to 15 papers, which helps with rapid synthesis. Measured conservatively, the savings across the workflow are: summary rewriting, 60 minutes; screening, 90 minutes; discovery, 45 minutes; organization, 40 minutes; and structural grouping, 30 minutes.
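Adding up these conservative figures is a quick check on the overall claim of roughly 4 to 5 hours saved. A minimal Python tally, using only the per-activity numbers quoted in the text:

```python
# Conservative per-activity savings in minutes, as quoted in the text.
savings_min = {
    "summary rewriting": 60,
    "screening": 90,
    "discovery": 45,
    "organization": 40,
    "structural grouping": 30,
}
total = sum(savings_min.values())
print(f"{total} minutes = {total / 60:.1f} hours")  # 265 minutes = 4.4 hours
```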

How much time can these tools save?

These tools can save roughly 4 to 5 hours in the process. Importantly, they do not compromise academic rigor; instead, they eliminate administrative drag. According to Mashable's analysis of workplace AI adoption, 40% of American workers now use AI in their jobs. Workers who use AI can increase their productivity by upwards of 40 percent. This trend is also evident in research contexts: available tools are effective, but workflow design determines whether they shorten timelines or complicate them. Knowing which tools are available is just the first step; it does not explain how they fit together into a smooth, unified workflow.

The 5-Hour Systematic Review Workflow

A systematic review can realistically be completed in 5 focused hours by organizing the process from the beginning and reducing interruptions. This timeline does not compromise rigor; instead, it reduces repetition, tab switching, and manual rewriting. Below is a clear workflow that shows where time is saved and explains why.

What tasks are involved in uploading and organizing sources?

Minutes 0 to 20: Upload and Organize Sources

Begin by uploading PDFs, articles, and links into one place. The system automatically deletes duplicates and applies filters based on your criteria. This eliminates the need to manually sort folders and track duplicates, saving 30 to 45 minutes compared to manual processing.
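Duplicate removal usually comes down to choosing a normalized key for each record. The sketch below is a hypothetical illustration, not any specific tool's behavior: it assumes each record is a dict with optional `doi` and `title` fields, prefers a normalized DOI, and falls back to a punctuation-insensitive title. The helper names and sample records are invented.

```python
import re


def dedup_key(record: dict[str, str]) -> str:
    """Prefer a normalized DOI; otherwise fall back to a normalized title."""
    doi = record.get("doi", "").strip().lower()
    if doi:
        return "doi:" + doi.removeprefix("https://doi.org/")
    # Collapse punctuation and whitespace so trivial variants match.
    title = re.sub(r"[^a-z0-9]+", " ", record.get("title", "").lower()).strip()
    return "title:" + title


def remove_duplicates(records: list[dict[str, str]]) -> list[dict[str, str]]:
    """Keep the first record seen for each key, preserving input order."""
    seen: set[str] = set()
    unique = []
    for rec in records:
        key = dedup_key(rec)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


records = [
    {"title": "Exercise for Depression: A Trial", "doi": "10.1000/xyz1"},
    {"title": "Exercise for depression - a trial", "doi": "https://doi.org/10.1000/XYZ1"},
    {"title": "Sleep and Mood in Adolescents"},
    {"title": "Sleep and mood in adolescents."},
]
for rec in remove_duplicates(records):
    print(rec["title"])
# Exercise for Depression: A Trial
# Sleep and Mood in Adolescents
```

A real pipeline would also need to match a record that has a DOI against a duplicate that lacks one; this sketch keys those two differently and would keep both.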

How do you generate structured summaries?

Minutes 20 to 60: Generate Structured Summaries

Summaries can be generated automatically for each source, pulling out key findings, methods, and limitations. Important themes are tagged, which removes the need to rewrite highlights by hand and saves the 60-90 minutes you would otherwise spend rewriting notes. The result is a set of searchable, structured insights, so you don't have to reopen each PDF later.

What is AI-assisted abstract screening?

Minutes 60 to 90: AI-Assisted Abstract Screening

The focus is on prioritizing high-relevance papers. Researchers quickly approve or reject papers, and borderline cases are flagged for closer review. The step this removes is reading low-relevance abstracts in full, which saves 30 to 50% of screening time. For instance, manually screening 200 abstracts typically takes about 3 hours; with AI-assisted prioritization, this drops to 1.5-2 hours.
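The approve, reject, and borderline flow can be pictured as thresholds on a relevance score. This is a minimal sketch under stated assumptions: the scores come from some upstream model, the function name `triage` is hypothetical, and the cut-offs of 0.7 and 0.2 are illustrative rather than recommended values.

```python
def triage(scores: dict[str, float], include_at: float = 0.7,
           exclude_below: float = 0.2) -> dict[str, list[str]]:
    """Split scored abstracts into review queues; a human still confirms each one."""
    queues: dict[str, list[str]] = {"likely_include": [], "borderline": [], "likely_exclude": []}
    # Visit the highest-scoring abstracts first so each queue is ranked.
    for pid, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
        if score >= include_at:
            queues["likely_include"].append(pid)
        elif score < exclude_below:
            queues["likely_exclude"].append(pid)
        else:
            queues["borderline"].append(pid)
    return queues


scores = {"A": 0.92, "B": 0.05, "C": 0.45, "D": 0.71}
print(triage(scores))
# {'likely_include': ['A', 'D'], 'borderline': ['C'], 'likely_exclude': ['B']}
```

Only the borderline queue needs a full read; the other two need a quick confirmation, which is where the screening-time savings come from.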

How do you perform theme clustering?

Minutes 90 to 120: Theme Clustering

Start by grouping sources by their main ideas. Identify dominant research patterns and note any differences early on. This removes the late-stage restructuring step, saving about 30 minutes during drafting.
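Grouping by theme before deep reading can be approximated with a keyword map per theme. The sketch below is a simplified, hypothetical illustration: the theme names, keywords, source labels, and one-line summaries are invented, and real tools would use richer text analysis than whitespace-split words.

```python
def group_by_theme(summaries: dict[str, str], themes: dict[str, set[str]]) -> dict[str, list[str]]:
    """Assign each source to every theme whose keywords appear in its summary."""
    groups: dict[str, list[str]] = {name: [] for name in themes}
    groups["unassigned"] = []
    for source, text in summaries.items():
        words = set(text.lower().split())
        matched = [name for name, keywords in themes.items() if words & keywords]
        for name in matched:
            groups[name].append(source)  # a source may support several themes
        if not matched:
            groups["unassigned"].append(source)  # flag for manual review
    return groups


themes = {
    "efficacy": {"effective", "improved", "reduced"},
    "adherence": {"dropout", "adherence", "attendance"},
}
summaries = {
    "Smith2020": "exercise improved symptoms but dropout was high",
    "Lee2021": "adherence was strong across sites",
    "Kim2019": "qualitative interviews with clinicians",
}
print(group_by_theme(summaries, themes))
# {'efficacy': ['Smith2020'], 'adherence': ['Smith2020', 'Lee2021'], 'unassigned': ['Kim2019']}
```

Letting one source sit under several themes makes overlaps visible early, and the "unassigned" bucket surfaces sources that may point to a theme you haven't named yet.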

What are comparative insights and their significance?

Minutes 120 to 150: Extract Comparative Insights

Ask questions across multiple sources and compare methods to identify areas of agreement and disagreement. Viewing all sources together is easier than switching between PDFs, avoids unnecessary shifts in focus, and saves 40 to 60 minutes.

How do you draft structured sections effectively?

Minutes 150 to 180: Draft Structured Sections

Draft the background, thematic findings, gaps, and methodological notes. Because the themes are already grouped, writing is easier, saving 60-90 minutes; the task becomes building rather than exploring. Organized themes, pulled quotes, structured summaries, and clear inclusion logic keep the writing flowing. Without that structure, drafting a mid-size review usually takes 4 to 6 hours of rereading, reorganizing, and chasing missing sources; structured drafting can take only 1.5 to 2 hours.

Many teams handle information gathering with various note apps and manual rewrites, believing this approach is thorough. However, as the number of sources grows and deadlines approach, this approach creates hidden problems: teams lose track of where each insight came from, repeat similar findings, and spend too much time assembling context before they can write clearly. Tools like Otio address this by grounding AI in your own sources, including PDFs, videos, and web links. Instead of switching between tools, gathering information becomes a conversation in one space, shortening drafting from days to hours while keeping every point traceable to its source.

In the last 60 minutes, focus on checking citations, confirming what should be included, improving logical flow, and making transitions smoother. Since the structure was established early, this final touch-up stage focuses on refinement rather than rebuilding.
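Tallying the timed blocks is a useful sanity check on any schedule like this one. The sketch below assumes the final 60-minute pass starts right after drafting ends; under that assumption the blocks add up to four hours, which would leave about an hour of the five-hour target as buffer.

```python
# Stage boundaries (start, end) in minutes, taken from the schedule above.
# Assumption: the final 60-minute pass begins when drafting ends at minute 180.
stages = {
    "upload and organize": (0, 20),
    "structured summaries": (20, 60),
    "AI-assisted screening": (60, 90),
    "theme clustering": (90, 120),
    "comparative insights": (120, 150),
    "draft sections": (150, 180),
    "final review": (180, 240),
}

bounds = list(stages.values())
# Each stage should start exactly where the previous one ended.
assert all(prev[1] == nxt[0] for prev, nxt in zip(bounds, bounds[1:])), "gap or overlap"

total_min = sum(end - start for start, end in bounds)
print(total_min, "minutes =", total_min / 60, "hours")  # 240 minutes = 4.0 hours
```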

What are the differences between traditional and structured workflows?

Traditional manual workflow: screening takes 3 to 6 hours, summarizing 4 to 8 hours, organizing 2 to 3 hours, and drafting 4 to 6 hours, for a total of roughly 13 to 23 hours, often spread over weeks. A structured AI-assisted workflow is far more efficient: centralization takes 1 hour, screening 1 hour, structuring 1 hour, drafting 1 to 1.5 hours, and polishing 1 hour, for a total of about 5 to 5.5 hours. Reliable tools like Otio can further streamline this process. The main difference is not thoroughness but the elimination of repeated work across tasks.

How does structure impact cognitive load?

Fragmentation increases cognitive load, leading to higher switching costs, more re-reading cycles, and delays in formatting. Structured AI workflows minimize the need to switch tools, prevent manual summarization, reduce late-stage reorganization, and eliminate duplicate handling. You're not thinking less; you’re coordinating less. However, structure only works if it is actually built. Most people skip this important step because they don't know where to begin.

Build Your Review Workspace in 10 Minutes

Most systematic reviews stall not because researchers lack rigor but because they skip the setup. They jump into reading before organizing sources, which guarantees rework. A structured approach takes just 10 minutes at the start and eliminates hours of duplication later. What's needed isn't more discipline but one centralized workspace where AI manages coordination while you focus on thinking. Start by uploading your main papers into a single environment. Avoid scattered folders and tabs; use one workspace where all sources are searchable, summarizable, and queryable. Platforms like Otio allow you to upload PDFs, paste article links, or import video lectures into a single space.

The AI instantly creates structured summaries, extracts key findings, and groups themes without needing manual tagging. By working where your sources already are, you eliminate the context-switching cost that can stretch reviews from days into weeks. The next step is to group by theme rather than by file name. Many researchers organize by author or publication year, which complicates subsequent processing. Thematic clustering uncovers patterns early, allowing you to see which studies agree, which contradict, and where gaps exist before you've read everything twice.

This structure turns into your draft outline. When you're ready to write, you are gathering insights instead of searching for them. This process isn't about working faster by cutting corners; it focuses on eliminating repetitive coordination that slows thorough work. The 10 minutes you spend building the structure upfront save you 15 hours you would otherwise spend reconstructing it later. Once your workspace is set up, every new review can start faster.
