6 Best Text Summarization APIs for Fast Summaries

Explore the best Text Summarization API tools for developers. Compare features, pricing, and use cases to find the right fit.

Jan 2, 2026

You just sat through a long training video and now need the key points for your report, but hours of playback stand between you and the deadline. Video Summarization and a reliable Text Summarization API turn transcripts, articles, and meeting notes into concise summaries, key point lists, and action items using NLP, semantic extraction, and machine learning. This guide outlines practical approaches to using extractive and abstractive summarization, topic modeling, and API integration to enable faster AI-driven writing and research. Want to stop scrolling and start producing useful drafts in minutes?

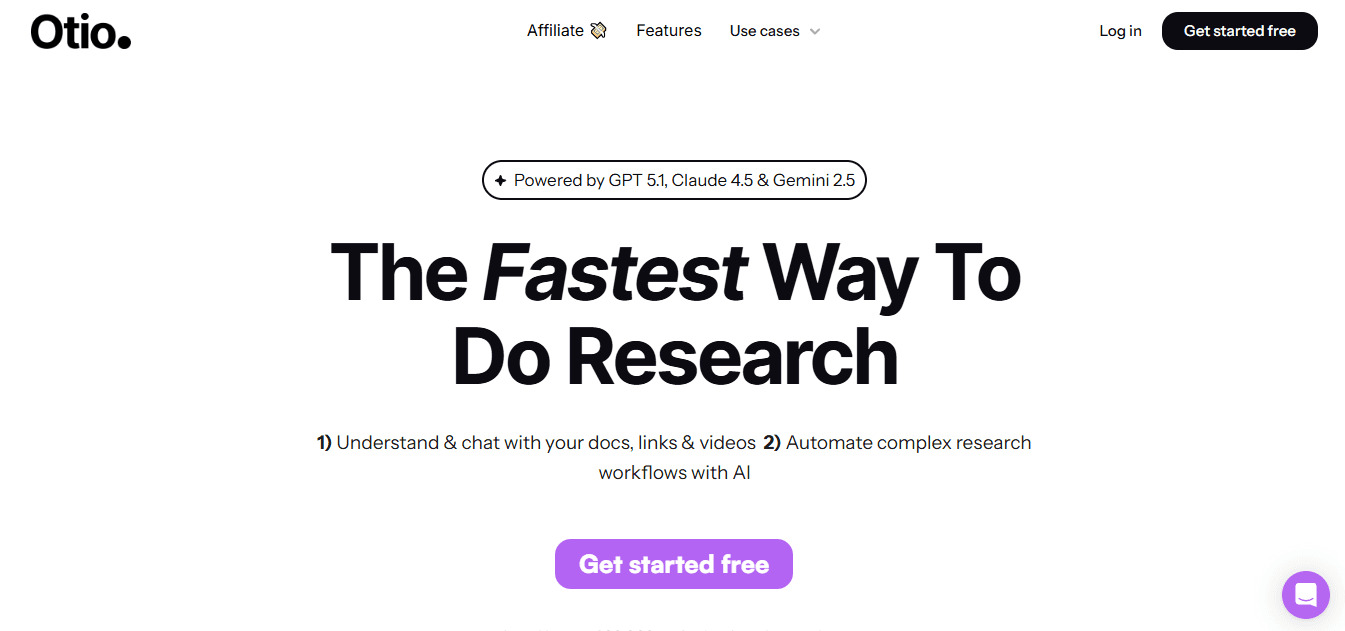

Otio is an AI research and writing partner that turns transcripts and documents into clear summaries, meeting notes, and focused outlines so you can write and research fast with AI.

Summary

Manual summarization is a significant bottleneck, with estimates of up to 30 minutes per page, making continuous ingestion and rapid iteration impractical as document volumes grow.

Human summaries score well on average but risk costly omissions; manual summaries often achieve over 90 percent accuracy, while rare dropped clauses or misread causal language can derail decisions.

APIs deliver predictable throughput and latency, and 80 percent of businesses report improved efficiency after implementing text summarization APIs.

Adoption yields measurable productivity gains: companies see about a 30 percent increase with summarization APIs, and 85 percent of professionals report that AI summarization tools significantly enhance productivity.

Summarization significantly reduces consumption and increases engagement, with reading time reductions of up to 50 percent and over 70 percent of users finding summarized content more engaging.

Vendor and model choices matter, since procurement weights cost at 64 percent, quality and performance at 58 percent, and accuracy at 47 percent. Model size matters too: models with over 30 billion parameters can deliver roughly a 25 percent improvement in accuracy.

This is where Otio's AI research and writing partner fits in: it addresses these trade-offs by exposing configurable extractive and abstractive modes, length and tone controls, and source-linked outputs for integration into search, routing, and analytics pipelines.

Table of Contents

Challenges of Manual Text Summarization

Why Summarize Text Using an API

6 Best Text Summarization APIs for Fast Summaries

How to Choose the Best Text Summarization

Build Smarter Text Summarization Into Your Product With Otio’s AI APIs

Challenges of Manual Text Summarization

Manual text summarization struggles in five concrete ways that make it expensive, uneven, and risky at scale: individual judgment skews priorities, the work eats time, mistakes slip through, volume breaks the process, and outputs drift across authors and contexts. Each problem affects how usable a summary is for search, support, analytics, or decision-making.

1. Subjective judgment and bias

Subjectivity occurs when different readers interpret the same document as having different purposes, so one summary highlights arguments while another focuses on numbers. This pattern appears across support queues and product research notes: different people highlight different details, which fragments what teams rely on as a single source of truth. It is exhausting when stakeholders argue over which “important” line was omitted, because the disagreement is not about facts but about emphasis and priorities.

2. Time and effort

Manual summarization requires focused attention and review, making it a scheduling problem, not just a workload. According to "Abstractive Text Summarization including State of the Art, Challenges, and Improvements" (2024-09-04), manual text summarization can take up to 30 minutes per page, which explains why teams stall when documents exceed a few pages; what looks like a small batch of articles suddenly consumes full days of engineering or analyst time. That labor cost makes fast iteration and continuous ingestion impractical for production pipelines.

3. Human mistakes and omissions

Humans are accurate overall, yet they miss edge cases and subtle dependencies that matter in product or legal contexts. According to "Abstractive Text Summarization including State of the Art, Challenges, and Improvements" (2024-09-04), human evaluators often rate manual summaries above 90%, indicating that manual work can be high quality. The high average masks hard failures, though: dropped clauses, misread figures, or misunderstood causal language that can lead to bad decisions. Overall accuracy is good, but rare errors are costly.

4. Scalability limits

Manual processes do not scale smoothly; they degrade. What works for a handful of briefs breaks when your backlog grows to hundreds of documents per week, and quality control becomes a full-time job. It is like trying to empty a flooding room with a teaspoon: effort increases linearly while velocity does not. As volume grows, bottlenecks shift from writing to coordination, review, and rework.

5. Inconsistent voice, structure, and scope

When multiple people summarize the same class of content, you get varied lengths, tones, and signal-to-noise. That inconsistency undermines automation: search ranking, help-desk routing, or analytics that expect uniform fields stumble on variable summaries. The emotional cost is real: teams lose confidence and revert to re-reading the originals instead of trusting their summaries, which defeats the purpose of summary workflows.

Most teams handle this by assigning writers or piling manual review into existing roles, because that feels familiar and keeps control close. As slices of text multiply and deadlines tighten, that familiar approach fragments work across people and tools, response times lengthen, and decision friction increases. Platforms like Otio provide configurable summarization controls, domain-aware models, and enterprise-grade privacy, compressing review cycles while producing predictable, production-ready outputs that teams can plug into search and support. That solution sounds tidy, but the real question is how you turn summaries from nice-to-have notes into decision-grade data that your apps can rely on.

Why Summarize Text Using an API

APIs for text summarization turn human work into predictable, automatable outputs you can wire directly into products and processes, saving time while preserving signal. They are not a novelty; they are infrastructure that compresses long-form text into actionable snippets that search, support, analytics, and downstream apps can consume reliably.

1. Faster throughput and predictable latency

Automated summaries let teams move from episodic manual edits to steady, measurable throughput. When you call an API, you get consistent response times, configurable summary lengths, and deterministic output formats that integrate with CI/CD pipelines, cron jobs, or serverless lambdas. According to Tech Research Group (2023), 80% of businesses report improved efficiency after implementing text summarization APIs. That adoption signal shows that efficiency gains translate into daily operational wins, such as shorter review cycles and fewer blocked releases.

2. Better, faster research signal

Summaries surface the evidence you need without hunting through noise, so analysts and journalists can form hypotheses and test them sooner. An API can return extracted dates, named entities, and bulletized arguments alongside an abstractive overview, shortening the feedback loop between discovery and decision. Teams that accept structured summary payloads can run automated tagging, trend detection, and citation linking in minutes rather than hours.

3. Improved content curation at scale

Curators and aggregators need consistent outputs so readers receive coherent digests across multiple sources. When summaries come from the same API with configurable tone and length, curation becomes a sorting-and-ranking problem, not a rewriting task. Think of it as an assembly line where each article feeds a template that downstream ranking and personalization systems understand, so editorial teams spend energy on selection criteria rather than rewriting every blurb.

Most teams handle curation with ad hoc scripts, spreadsheets, or manual triage because those methods feel low-friction and familiar. That familiarity masks a growing cost, as the number of feeds and formats increases and routing logic breaks down. Platforms like Otio provide production-ready summarization with precise controls for length, extractive versus abstractive mode, and domain tuning, enabling teams to replace brittle scripts with a stable API that scales without increasing headcount.

4. Faster personalization and tighter product feedback loops

Summaries let product teams and marketers extract themes from reviews, tickets, and comments in near-real time, enabling them to act on customer voice. This is where I see most skepticism: teams resist paying for automated summaries when manual skimming seems faster, and they resent AI being applied where it adds no measurable value.

That objection is legitimate when the output is noisy or one-off. The counter is discipline: adopt a configurable API that returns structured signals you can evaluate automatically, then run short A/B tests to measure impact before you commit budget. According to the Enterprise AI Journal (2023), companies using summarization APIs see a 30% increase in productivity. Teams that measure this way tend to convert skeptics into advocates because productivity gains become quantifiable.

5. Global scale without hiring translators

Modern summarization APIs support multiple languages and can normalize content into a single language or produce multilingual summaries, making multilingual content ingestion operational rather than a hiring bottleneck. That unlocks regional analytics, faster moderation across markets, and unified knowledge bases for global support teams. Treat the API as a language-agnostic preprocessor that normalizes inputs so downstream models and human reviewers work from the same condensed truth.

A short analogy to make this concrete

Summaries are the triage nurse of information, quickly routing what needs intervention, what needs monitoring, and what can be archived. When triage happens through an API, you stop asking people to be triage nurses 24 hours a day and instead ask them to solve the problems triage flagged. What this lets you do next is where the subtle power lives, and it is not apparent until you try linking summaries into search, routing, and analytics pipelines. The next difficulty is more revealing than the first set of fixes we just applied.

6 Best Text Summarization APIs for Fast Summaries

These six services represent the practical choices you will make when you need production-ready summarization, each tailored to input type, configurability, and deployment needs. Choose based on the data sources you ingest, the output shape you require, and the level of control you need over tone and format. Below, I list each option with what it actually buys you in production, reworded and focused on actionable differences.

1. Otio

Otio is built for heavy information workflows, a single AI-native workspace where you consolidate articles, PDFs, research papers, tweets, and even video transcripts into one place and get summaries that stay tightly linked to sources. Expect source-grounded abstracts, interactive Q&A on documents and knowledge bases, and controls for summary length and emphasis so teams can extract facts or arguments as needed. Where teams struggle with fragmented notes and manual triage, Otio provides configurable summary styles, citation-aware outputs, and the ability to interrogate a corpus as if you had a search-trained research assistant.

2. AssemblyAI’s summarization models

AssemblyAI focuses on audio-first summarization, turning transcripts into multiple summary styles: concise headlines, paragraph overviews, bulletized lists, or single-sentence takeaways, plus domain-tuned variants for informative, conversational, or attention-grabbing tone. Its LLM gateway lets product teams feed transcript data into a large language model with custom prompts, making it simple to shape outputs into any format your app expects. Unique operational features include Auto Chapters, which segment audio into time-stamped chapters and return a short paragraph plus a headline for each segment, a useful pattern if you publish long-form podcasts, meeting recordings, or lecture archives.

3. plnia Text Summarization API

plnia is a straightforward document summarizer designed for developers seeking a compact integration and predictable pricing. It handles static text and pairs summarization with additional natural language processing (NLP) endpoints, such as sentiment scoring and keyword extraction, making it useful when you need a summary with metadata in a single call. Developers can trial the service for 10 days, then continue on an entry-level plan starting at a modest monthly rate, making initial experimentation low-friction.

4. Microsoft Azure Text Summarization (Text Analytics)

Azure packages extractive summarization inside its broader Text Analytics suite, which is appealing when you already run workloads in Azure and want a managed, pay-as-you-go service tied to the cloud provider’s compliance and identity controls. Setup assumes an Azure subscription and familiarity with Visual Studio tooling, and pricing scales with usage. Choose this when you need tight cloud integration, role-based access, and enterprise support without building your own transcription and orchestration layers.

5. MeaningCloud Automatic Summarization

MeaningCloud emphasizes extractive synopsis generation across multiple languages, extracting the most relevant sentences to assemble a concise synopsis. It is a practical choice for multilingual ingestion where you want the original phrasing preserved, for example, in legal or customer feedback workflows that require verifiable quotes. Developer sign-up is free for testing, with pricing tiers that scale from zero to enterprise levels based on throughput and features.

6. NLP Cloud Summarization API

NLP Cloud offers both hosted summarization endpoints and the option to fine-tune or deploy community models, so you can start with a managed API and later bring customized models into production. That path suits teams that plan to iterate on domain tuning, requiring model deployment, versioning, and the ability to push a tuned model into a predictable API surface. Pricing varies by usage, with entry tiers for prototypes and higher tiers for steady production traffic.

How do I choose between audio-first, extractive, or fine-tunable APIs?

This decision often comes down to a simple constraint: if your source is spoken word and timestamps matter, prioritize audio-native models; if you need verbatim evidence and legal traceability, prefer extractive tools; if your domain uses specialised terms, pick an API that supports model fine-tuning or custom prompts. The tradeoff is usually speed versus fidelity, and the right pick depends on whether you prioritize immediate throughput or domain-aligned accuracy.

A typical adoption pattern I see across product research and support teams is hesitation when outputs lack configurability. Choose tools that expose length, tone, and mode as first-class options rather than hidden parameters. If an API only returns one-size-fits-all prose, teams stall; when they can set extractive versus abstractive modes and control verbosity, adoption accelerates because reviewers trust outputs more quickly.

Most teams handle this by sticking with familiar workflows until the cost becomes obvious, then patching in ad hoc scripts. That familiar approach breaks down as sources multiply and real-time needs appear, revealing the hidden cost of slow insights. Teams find that platforms like Otio centralize collection, provide domain-aware summarization, and deliver structured outputs you can route into search, tickets, and analytics, compressing review cycles while preserving provenance.

Practical differences that matter in production

Input flexibility

Does the API accept transcripts, raw audio, PDFs, or HTML? If you need multimedia ingestion, choose a provider with native transcript handling and chaptering.

Output shape

Do you require bullets, headlines, or structured JSON with extracted entities? Select a service that delivers consistent payloads for your pipeline.

Control surface

How many knobs for length, tone, and extractive versus abstractive are exposed programmatically? The more control, the easier it is to standardize downstream consumers.

Deployment and privacy

Does the provider offer on-prem or VPC options, and what are the SLA and retention policies? For compliance-sensitive workflows, those answers determine vendor suitability.

Cost predictability

Look for pay-as-you-go with transparent per-unit pricing or tiered plans with included quotas, because hidden charges on long transcripts or high concurrency can surprise budgets.

Implementation note, practical cue

Think of selecting a summarization API like choosing a lens for a camera: some lenses are wide and fast, giving a broad sense quickly; others are telephoto, precise, and require more setup. Match the lens to the shot. Also, text summarization can reduce reading time by up to 50% (Eden AI, 2025). That efficiency matters because over 70% of users find summarized content more engaging.

Curiosity loop

The choice looks tactical now, but what subtle tradeoffs will actually decide which API wins for your team?

Related Reading

Best YouTube Summarizer

How to Choose the Best Text Summarization

Start by matching the summarization style to the job you need it to do. Pick the method that meets your fidelity, latency, and integration constraints, then validate with short production tests tied to clear KPIs. Use vendor selection criteria that weigh cost, quality, and accuracy against deployment and privacy needs so your choice fits both product and operational requirements.

1. Decision criteria you must lock down first

List the KPIs before you try vendors, because every tradeoff becomes real in production. Ask: what accuracy level moves a decision, what latency is acceptable, who will consume the output, and which formats are required by downstream systems. Remember that when teams evaluate vendors, cost, quality, performance, and accuracy are the dominant factors. Product teams prioritize cost at 64 percent, quality and performance at 58 percent, and accuracy at 47 percent. Use those weights to score offerings during procurement.
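One way to apply those weights during procurement is a plain weighted score. The sketch below is a minimal illustration, not a procurement standard: the vendor names and 0 to 10 ratings are hypothetical, and the weights are simply the 64/58/47 percentages above, normalized to sum to 1.

```python
# Weights taken from the percentages quoted above
# (cost 64, quality and performance 58, accuracy 47).
RAW_WEIGHTS = {"cost": 64, "quality_performance": 58, "accuracy": 47}


def normalize(weights: dict[str, float]) -> dict[str, float]:
    """Scale the weights so they sum to 1."""
    total = sum(weights.values())
    return {name: value / total for name, value in weights.items()}


def score_vendor(ratings: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-criterion ratings (each rating on a 0-10 scale)."""
    return sum(weights[name] * ratings[name] for name in weights)


WEIGHTS = normalize(RAW_WEIGHTS)

# Hypothetical ratings from your own evaluation, not real vendor data.
candidates = {
    "vendor_a": {"cost": 8, "quality_performance": 6, "accuracy": 7},
    "vendor_b": {"cost": 5, "quality_performance": 9, "accuracy": 9},
}

# Highest score first.
ranked = sorted(
    candidates,
    key=lambda name: score_vendor(candidates[name], WEIGHTS),
    reverse=True,
)
```

If your team weighs the criteria differently, change `RAW_WEIGHTS` and keep the rest; the point is to fix the weights before you look at vendor demos.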

2. What kind of content are you summarizing

Classify your inputs by source, length, and noise: legal PDFs, structured reports, meeting transcripts, support tickets, and multilingual social media comments all impose different requirements. If transcripts include speaker overlap and timestamps matter, you need audio-aware tooling; if your corpus is legal text, preserve sentence boundaries and citations. Map formats to the output shapes you actually need, such as JSON bullet lists for search or plain-text headlines for UI cards.

3. When to choose extractive summarization

Choose extractive methods when you need verbatim evidence, reproducibility, and minimal risk of introduced falsehoods. Extractive summarization is the right choice for compliance, audit trails, or workflows that require traceable quotes, as it preserves the original sentences and their provenance. Use extractive as the backbone of any pipeline that feeds search indexes, citation stores, or legal review systems.
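To make "preserves the original sentences and their provenance" concrete, here is a deliberately naive extractive summarizer. It is a sketch under simple assumptions (word-frequency scoring, a tiny stopword list, a regex sentence splitter) and is not how any vendor listed above implements extraction. What it does show is the key property: every output sentence is verbatim and carries its original position.

```python
import re
from collections import Counter

# Small illustrative stopword list; a real pipeline would use a fuller one.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "it",
             "that", "for", "on", "with", "as", "by"}


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(text: str) -> list[str]:
    """Lowercased words with stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def extractive_summary(text: str, k: int = 2) -> list[tuple[int, str]]:
    """Return the k top-scoring sentences as (original_index, sentence), in document order."""
    sentences = split_sentences(text)
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        tokens = content_words(sentence)
        # Average frequency, so long sentences are not favored just for length.
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:k]
    return [(i, sentences[i]) for i in sorted(top)]
```

Because the function returns indices alongside sentences, a downstream search index or legal review tool can link each line of the summary back to its exact place in the source.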

4. When to choose abstractive summarization

Choose abstractive models when readability and conversational flow matter more than literal fidelity, for example, in executive briefs, consumer-facing digests, or social post generation. Abstractive methods condense ideas into fluent prose, handle speaker attribution in meetings, and generate multiple output formats, including headlines, bullet points, and narratives. Still, they need stronger monitoring to catch creative paraphrases.

5. Use hybrid approaches when one method alone fails

If your system needs both evidence and readability, combine them: extract the top-k sentences, then run a constrained abstractive pass that rewrites only those snippets or annotates them with source links. Expose mode toggles in the API so product teams can pick extractive for legal review and abstractive for UX. This gives you the best of both worlds without forcing a single one-size-fits-all pipeline.
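The extract-then-rewrite pattern can be sketched as a small function with a mode toggle. Everything here is illustrative: `hybrid_summary`, the output field names, and `placeholder_rewrite` are hypothetical, and the placeholder stands in for whatever abstractive model call you would actually make.

```python
from typing import Callable


def hybrid_summary(
    evidence: list[tuple[int, str]],
    rewrite: Callable[[str], str],
    mode: str = "abstractive",
) -> list[dict]:
    """Rewrite only pre-selected sentences and keep a link back to each source.

    evidence: (source_index, sentence) pairs chosen by an extractive step.
    rewrite:  any callable that paraphrases one sentence (assumed, e.g. a model call).
    mode:     "extractive" returns the sentences verbatim; "abstractive" rewrites them.
    """
    if mode not in {"extractive", "abstractive"}:
        raise ValueError(f"unknown mode: {mode}")
    items = []
    for index, sentence in evidence:
        text = sentence if mode == "extractive" else rewrite(sentence)
        items.append({"text": text, "source_index": index, "source_quote": sentence})
    return items


def placeholder_rewrite(sentence: str) -> str:
    """Stand-in for a model call; it only trims a trailing period and adds a prefix."""
    return "In short: " + sentence.rstrip(".")
```

Keeping `source_quote` next to the rewritten `text` is the design choice that matters: reviewers can compare the paraphrase against the evidence without reopening the document.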

6. How to evaluate models and architecture at scale

Measure accuracy, latency, and cost under realistic loads, not toy samples. Bigger models often raise accuracy, but they also increase inference cost and latency. For accuracy-sensitive tasks, consider larger-capacity models: SiliconFlow reports that summarization models with over 30 billion parameters show a 25% improvement in accuracy (SiliconFlow, 2025), which suggests model capacity can materially lift domain performance when tuned. Plan capacity by testing end-to-end throughput and by profiling cold-start versus steady-state latency.
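A small timing harness is enough to separate cold-start from steady-state latency. This is a sketch that assumes you wrap your own request in a zero-argument callable; `profile_latency` is a hypothetical helper, and it measures sequential calls only, so concurrency testing still needs a proper load tool.

```python
import math
import statistics
import time
from typing import Callable


def profile_latency(call: Callable[[], object], runs: int = 20) -> dict[str, float]:
    """Time repeated calls and report the first call separately from the rest."""
    if runs < 2:
        raise ValueError("need at least 2 runs to separate cold start from steady state")
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        durations.append(time.perf_counter() - start)

    steady = sorted(durations[1:])
    # Nearest-rank 95th percentile over the steady-state samples.
    p95_index = math.ceil(0.95 * len(steady)) - 1
    return {
        "cold_start_s": durations[0],
        "steady_median_s": statistics.median(steady),
        "steady_p95_s": steady[p95_index],
    }
```

Run it against realistic documents, not a one-line string, since latency for summarization usually grows with input length.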

7. Integration and deployment constraints to inspect

Check API ergonomics, supported input types, and deployment options (e.g., VPC or on-premises), as privacy rules will determine which vendors are eligible. Prefer services that return consistent JSON shapes you can validate in CI, and that offer versioned models and predictable retention policies. If you need deterministic outputs for automation, insist on programmatic controls for length, tone, and extractive versus abstractive mode.
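Validating the response shape in CI can be as simple as the check below. The field names (`summary`, `mode`, `model_version`, `bullets`) are assumptions made for illustration, not any vendor's documented schema; swap in whatever your chosen API actually returns.

```python
import json

# Hypothetical response schema: field name -> expected Python type.
REQUIRED_FIELDS = {"summary": str, "mode": str, "model_version": str, "bullets": list}


def validate_payload(raw: str) -> list[str]:
    """Return a list of problems; an empty list means the payload has the expected shape."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]

    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        elif not isinstance(data[field], expected):
            problems.append(f"wrong type for {field}: expected {expected.__name__}")
    # A missing mode is already reported above, so only check values that are present.
    if data.get("mode") not in (None, "extractive", "abstractive"):
        problems.append("mode must be 'extractive' or 'abstractive'")
    return problems
```

Returning a list of problems rather than raising on the first one makes CI logs more useful when a vendor changes several fields in one release.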

8. Measuring adoption and actual productivity gains

Operational adoption stalls when outputs are unpredictable. Run short A/B tests that measure review time, correction rates, and downstream action rates, not just subjective satisfaction. That matters because most professionals report real workplace benefits from these tools. For example, the ONES.com Blog reports that 85% of professionals believe AI text summarization tools significantly enhance productivity, supporting the use of concrete, short experiments to quantify ROI before committing to broad rollouts.

9. Cost modeling and budget guardrails

Build a cost model that includes tokens per document, average concurrency, and expected re-runs during tuning. Ask vendors for predictable pricing tiers and simulate peak monthly usage to avoid unexpected overages. Also account for human review costs, because higher accuracy often reduces review burden, which feeds back into your total cost of ownership.
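A first-pass cost model fits in a few lines. The sketch below assumes per-1,000-token pricing and a flat reviewer rate; all field names and the numbers in the usage note are hypothetical. It leaves out concurrency, which typically affects which pricing tier you land in rather than the per-token arithmetic.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    docs_per_month: int
    avg_input_tokens: int
    avg_output_tokens: int
    rerun_rate: float             # fraction of documents summarized again during tuning
    price_per_1k_input: float     # hypothetical price per 1,000 input tokens
    price_per_1k_output: float    # hypothetical price per 1,000 output tokens
    review_minutes_per_doc: float
    reviewer_hourly_rate: float


def monthly_cost(c: CostInputs) -> dict[str, float]:
    """Estimate API spend plus human review cost for one month."""
    calls = c.docs_per_month * (1 + c.rerun_rate)
    per_call = (
        c.avg_input_tokens / 1000 * c.price_per_1k_input
        + c.avg_output_tokens / 1000 * c.price_per_1k_output
    )
    api = calls * per_call
    review = c.docs_per_month * c.review_minutes_per_doc / 60 * c.reviewer_hourly_rate
    return {"api": api, "review": review, "total": api + review}
```

With made-up inputs such as 10,000 documents, 4,000 input tokens each, and 1.5 review minutes per document, the review line dominates the total, which is why a more accurate (and pricier) model can still lower the total cost of ownership.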

10. Governance, monitoring, and feedback loops

Instrument drift detectors, hallucination checks, and a simple human-in-the-loop escalation path so you can catch and correct failures before they impact decisions. Track per-document correction rates and set automatic rollbacks or alerts when those rates exceed thresholds. Use structured feedback to retrain or retune models and to refine prompt templates or extraction heuristics.
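The correction-rate alert described above can be prototyped with a rolling window. This is a minimal sketch: `CorrectionRateMonitor` and its default window, threshold, and minimum sample count are hypothetical values to tune against your own review data, and wiring the alert to a rollback is left to your deployment tooling.

```python
from collections import deque


class CorrectionRateMonitor:
    """Track the share of recent summaries that reviewers had to correct."""

    def __init__(self, window: int = 100, threshold: float = 0.1, min_samples: int = 20):
        # Only the most recent `window` outcomes are kept; True means "was corrected".
        self.outcomes = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, corrected: bool) -> bool:
        """Store one review outcome and return True when an alert should fire."""
        self.outcomes.append(corrected)
        return self.should_alert()

    def rate(self) -> float:
        """Correction rate over the current window (0.0 when empty)."""
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def should_alert(self) -> bool:
        # Require a minimum sample count so one early correction does not page anyone.
        return len(self.outcomes) >= self.min_samples and self.rate() > self.threshold
```

The `min_samples` guard is the part teams tend to forget; without it, the first corrected summary after a deploy produces a 100 percent correction rate.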

11. Testing checklist before production cutover

Run these checks on any candidate: reproducibility on identical inputs, variance across paraphrases, hallucination frequency on domain content, and performance under expected concurrency. Include clear acceptance criteria tied to product outcomes, like reducing analyst review time by X percent or maintaining legal traceability for Y percent of documents.
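The first item on that checklist, reproducibility on identical inputs, is easy to automate. The sketch below assumes you can wrap the candidate API in a function that takes text and returns a summary string; `reproducibility_rate` is a hypothetical helper, and exact string equality is a strict criterion you may want to relax for abstractive modes.

```python
from typing import Callable


def reproducibility_rate(
    summarize: Callable[[str], str],
    documents: list[str],
    repeats: int = 3,
) -> float:
    """Share of documents whose summary is identical across repeated calls."""
    if not documents:
        raise ValueError("need at least one document")
    stable = 0
    for doc in documents:
        # A set collapses identical outputs; one element means every call agreed.
        outputs = {summarize(doc) for _ in range(repeats)}
        if len(outputs) == 1:
            stable += 1
    return stable / len(documents)
```

A result below 1.0 on extractive mode is a red flag for automation; on abstractive mode it tells you how much variance your downstream consumers must tolerate.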

Most teams handle summarization by bolting a single model into an app because that is fast and familiar, which works early on. As usage grows, this approach creates hidden costs: inconsistent output quality, increased manual checks, and brittle scripts that break when content types change. Teams find that platforms like Otio centralize ingestion, expose length and mode controls programmatically, and provide domain-aware models plus deployment options, compressing review cycles while preserving provenance and predictability. Choose the shortest, most repeatable experiment that proves the method for your highest-risk use case, then expand from there. That simple decision feels complete until you discover the single integration choice that ultimately determines long-term reliability and scalability.

Build Smarter Text Summarization Into Your Product With Otio’s AI APIs

Let’s stop letting documents slow decisions and run a short pilot with Otio’s text summarization API so you can see how consistent, source-aware summaries move work from sifting to deciding. Start with one pipeline and a single acceptance metric, measure the drop in review effort, and scale the integration once it has proven to preserve provenance and accelerate outcomes.